Good morning on this exciting BFCM week for ecommerce! I've been on a breakfast burrito kick lately, so while trying a new one (for me) in town, I scrolled social media as is my common breakfast practice. The day before, I had seen Charley Brennand (she hosts Performance MCR, a conference in Manchester I'm speaking at in 2026!) post a list of helpful PPC things, and one of them leapt out at me. It was on testing, but not simply how to go about testing. It was a comment on the importance of ensuring everyone involved is on the same page, from beginning to end, regarding clear objectives.

Here is what she said:

So this morning, I was pondering this more and decided to jot down my own thoughts, because what I think Charley is getting at here is really, really crucial.

I don’t think she is simply encouraging collaboration between the tactical implementation specialists and strategic direction deciders at your org or client… she is saying more (which I completely agree with). I think she is saying that the goals and objectives of the test MUST be established before the test is created, otherwise we won’t even agree on the test results or purpose… and the quiet part out loud with that is: if we don’t agree on the test results or purpose, then someone will ultimately be viewed as “messing up” and… hint, it won’t be the strategic decision makers who take the fall here ;)

More importantly, I think this helps reveal a crucial piece of the testing puzzle that I don’t think many fully understand. That is:

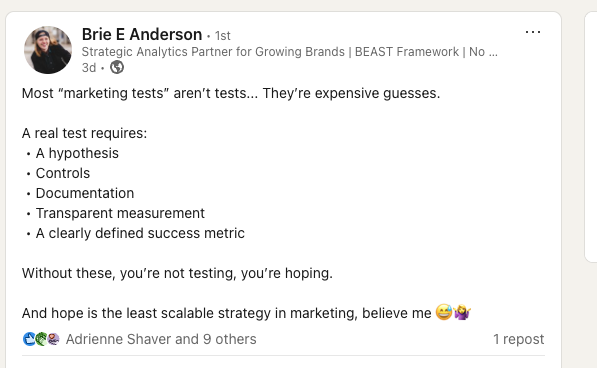

A test is called a test because there is actual risk involved.

Even in the most thought-through, well-designed, objective-focused test, there is risk. If there were no risk, it wouldn't be a test. It would be a best practice, or even a guarantee.

Get it? If you were guaranteed that something would work, there would be no point in testing. You would just implement it with the expectation that it would work.

But with testing, you may identify a new audience to try, or new creative to run, without actually knowing how it will perform (again, thus the reason for a solid testing structure). So there is an unknown factor which, and this is the crucial part, means your test could fail and you could lose money on your test.

By the way, being able to actually use a test for your benefit, even one with a negative outcome, also means you need to have created the test in a properly structured way. Brie Anderson does a great job noting that here:

Of course, even a negative outcome is a crucial testing discovery, and valuable, because if the test was done correctly it helps guide where you *should* invest money in the future. But this goes to risk tolerance: too few understand the need for (1) well-designed testing procedures and (2) the ability to lose money in order to refine where to spend money.

If there is no place or stomach for "risk" in your organization, or your client's, it's probably time to pull back and deal with that philosophy before trying to continue with various tests.

That "pull back" may just mean that you all acknowledge "we're not in a place of testing right now because our risk tolerance is so low". That's perfectly okay, but then that means there is less discovery and prospecting happening as well, since the riskiest parts of business growth are in outreach. So frankly, THAT is risky. By playing too cautiously, you are preventing your business from the profitable growth it could be receiving (and possibly from long-term survival, if you never grow and inflation slowly buries you).

So, again, it's really important to identify what everyone's risk tolerance (and understanding of tests) is before just running after testing this, that, and all the stuffs.

I’d like to close by pointing you to another of my friends, Sam Tomlinson, who has this healthy whiteboard video on Google Ads A/B testing procedures:

All that to say, test… but make sure you all agree on:

(1) the risk involved in a test

(2) the testing procedure so you can be confident in it

(3) the objectives of the test: how can you positively use whatever the outcome is in order to shape future testing or campaign optimizations?

What about you? Follow me on LinkedIn and toss in an opinion here. What did I get right? Wrong? Let’s discuss and grow together!
